1. Formalized decision process → Conflict about criteria

It’s usually a good idea to establish a methodology for making repeatable, complex decisions. But inevitably you’ll have to allow wiggle room for the unquantifiable or the unexpected; leaving this gray area exposes you to criticism that it’s not a rigorous methodology after all. Other sources of criticism are the weighting and the calculations applied in your decision formulas – and the extent of transparency provided.

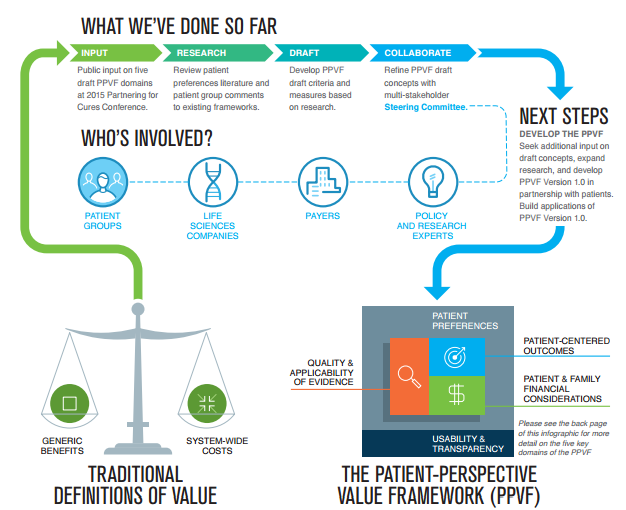

How do you set priorities? In healthcare, how do you decide who to treat, at what cost? To formalize the process of choosing among options, several groups have created so-called value frameworks for assessing medical treatments – though not without criticism. Recently Ugly Research co-authored a post summarizing industry reaction to the ICER value framework developed by the Institute for Clinical and Economic Review. Incorporation of patient preferences (or lack thereof) is a hot topic of discussion.

To address this proactively, FasterCures has led the creation of the Patient Perspective Value Framework to inform other frameworks about what’s important to patients (cost? impact on daily life? outcomes?). They’re asking for comments on their draft report; comment using this questionnaire.

2. Analytics → Better tenure decisions

A new analysis in the MIT Sloan Management Review observes: “Using analytics to improve hiring decisions has transformed industries from baseball to investment banking. So why are tenure decisions for professors still made the old-fashioned way?”

Ironically, academia often proves to be one of the last fields to adopt change. Erik Brynjolfsson and John Silberholz explain that “Tenure decisions for the scholars of computer science, economics, and statistics — the very pioneers of quantitative metrics and predictive analytics — are often insulated from these tools.” The authors say “data-driven models can significantly improve decisions for academic and financial committees. In fact, the scholars recommended for tenure by our model had better future research records, on average, than those who were actually granted tenure by the tenure committees at top institutions.”

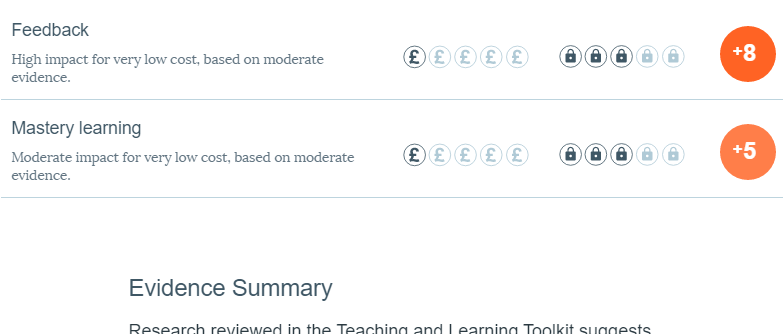

3. Visuals of research findings → Useful evidence

The UK Sutton Trust-EEF Teaching and Learning Toolkit is an accessible summary of educational research. The purpose is to help teachers and schools more easily decide how to apply resources to improve outcomes for disadvantaged students. Research findings on selected topics are nicely visualized in terms of implementation cost, strength of supporting evidence, and the average impact on student attainment.

4. Absence of patterns → File-drawer problem

We’re only human. We want to see patterns, and are often guilty of ‘seeing’ patterns that really aren’t there. So it’s no surprise we’re uninterested in research that lacks significance, and disregard findings revealing no discernible pattern. Stashing away projects like this is called the file-drawer problem. It’s a problem because the null results could be valuable to others who might otherwise pursue a similar line of investigation. But Data Colada says the file-drawer problem is unfixable, and that’s OK.

5. Optimal stopping algorithm → Practical advice?

Summarizing ideas from Algorithms to Live By (by Brian Christian and Tom Griffiths), Stewart Brand describes an innovative way to help us make complex decisions. “Deciding when to stop your quest for the ideal apartment, or ideal spouse, depends entirely on how long you expect to be looking…. [Y]ou keep looking and keep finding new bests, though ever less frequently, and you start to wonder if maybe you refused the very best you’ll ever find. And the search is wearing you down. When should you take the leap and look no further?”

Optimal stopping is a mathematical approach to deciding when to stop searching and commit to a choice, such as making the right hire or landing the right job. Brand says “The answer from computer science is precise: 37% of the way through your search period.” The question is, how can people translate this concept into practical steps guiding real decisions? And how can we apply it when we have to live with the consequences of each choice?
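For readers who want to see where the 37% figure comes from, here is a rough simulation sketch of the classic "look, then leap" rule. It rests on assumptions that real searches rarely satisfy: candidates arrive in random order, each can be ranked against those already seen, a rejected candidate can't be recalled, and success means landing the single best option. The function names `pick_with_cutoff` and `success_rate` are made up for illustration and don't come from the book.

```python
import math
import random


def pick_with_cutoff(scores, cutoff_fraction=1 / math.e):
    """Look-then-leap: observe the first cutoff_fraction of candidates
    without committing, then take the first one that beats them all."""
    cutoff = int(len(scores) * cutoff_fraction)
    best_seen = max(scores[:cutoff], default=float("-inf"))
    for score in scores[cutoff:]:
        if score > best_seen:
            return score
    # Nobody beat the early benchmark, so we are stuck with the last option.
    return scores[-1]


def success_rate(n_candidates=100, trials=10000,
                 cutoff_fraction=1 / math.e, seed=0):
    """Estimate how often the rule lands the single best candidate."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        scores = list(range(n_candidates))  # higher number = better candidate
        rng.shuffle(scores)                 # assumed: random arrival order
        if pick_with_cutoff(scores, cutoff_fraction) == n_candidates - 1:
            wins += 1
    return wins / trials


if __name__ == "__main__":
    for fraction in (0.10, 1 / math.e, 0.60):
        rate = success_rate(cutoff_fraction=fraction)
        print(f"look at first {fraction:.0%}, then leap: best pick in {rate:.1%} of runs")
```

Under these assumptions, a cutoff near 1/e (about 37%) should come out ahead of much earlier or much later cutoffs, and it finds the very best candidate only a little more than a third of the time. That may be part of why translating the rule into real decisions is harder than the tidy number suggests.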

Posted by Tracy Allison Altman on 28-Dec-2016.