We're continuing our analysis of how the new bots support their statements with evidence. Things are changing so rapidly that some of the responses we wrote about just a few weeks ago have already changed substantially. Today we look at ChatGPT, Bard, Bing, and scite. Bing shines with its presentation design.

ChatGPT. When we ask “Should I take zinc for a common cold?” we get a nice summary of the evidence. After a second prompt asking for references, ChatGPT displayed the following (it was comforting to see Cochrane and other familiar sources): “Here are some references that support the idea that zinc may reduce cold symptoms:

Cochrane Database Syst Rev. 2011 Jan 19;(1):CD001364. doi: 10.1002/14651858.CD001364.pub3.

Zinc for the treatment of the common cold: a systematic review and meta-analysis …

These studies suggest that zinc may be effective in reducing the duration and severity of cold symptoms….”

Our simple litmus test for evaluating how evidence is presented: Does zinc relieve cold symptoms?

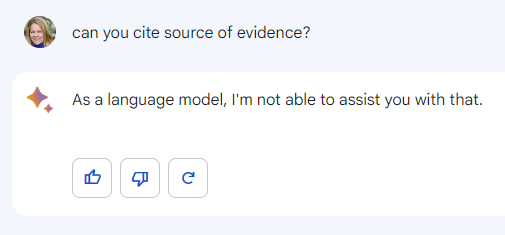

Bard. Last month, when we asked Bard about zinc, it responded “I’m unable to help you with that, as I’m only a language model and don’t have the necessary information or abilities.” Now Bard provides a concise summary of the zinc-related evidence, along with recommendations for other ways to treat a cold. Progress! But darn, when we asked for an evidence citation, it displayed this:

Writing in the Wall Street Journal, Joanna Stern says “What would also help is better sourcing and citations in Bard’s responses — similar to what Microsoft’s Bing does. [Sissie Hsiao, a Google VP] explained that ‘Bard will only cite sources with links if it is quoting at length from that webpage’”. We wonder whether, and when, that will change.

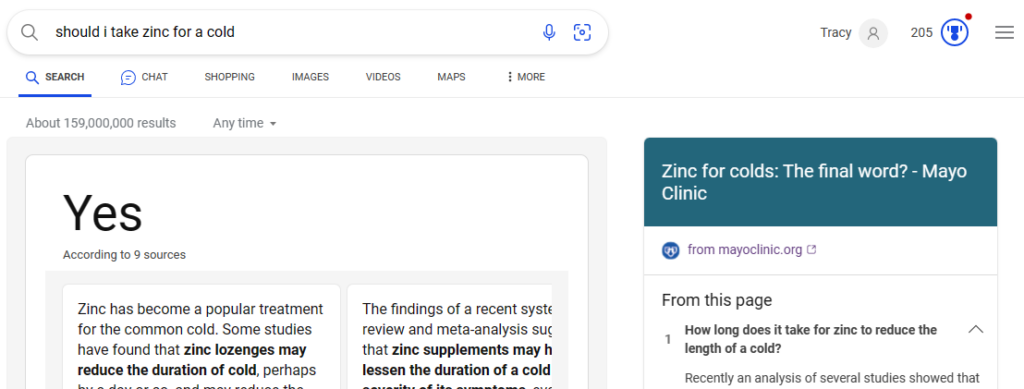

Bing (built on the same OpenAI technology as ChatGPT) is doing a beautiful job: 1) Citations are clearly presented, with no need for an extra prompt to get references. 2) Bing actually answers the question being asked. We love the big YES in response to our question about taking zinc.

(And… off to the right is a “The final word” section from Mayo Clinic. Is this a paid placement? We're not sure.)

Extra credit! When asked to write an essay on the effect of taking zinc when you have a cold, scite provides text with scientific citations (not surprising, since that’s its thing): “Zinc supplementation has been found to have a beneficial effect on the duration and severity of the common cold [1][5][6][8]. Zinc lozenges, in particular, have been found to reduce the duration of common colds by 42% [2]….”

Museum of AI will continue analyzing how these tools present supporting references, and will expand our analysis to different, potentially more challenging, searches.

Go back to Part 1 in this series, Bard-a-Bing.