Now that machines are answering questions and making decisions, it’s essential that people and AI get on the same page when citing evidence. What sources are considered acceptable? Where should citations be presented (inline, in conventional bibliographies, or…)? Should data/analysis be simply referenced, or transparently included in supporting data sets? Here we look at state-of-the-art examples from generalized AI.

What does it mean to cite evidence?

Cite: To quote or refer to (a book or author, for example) as an authority or example in making an argument…. To mention or bring forward as support, illustration, or proof. (American Heritage Dictionary)

On the human side, we have generally agreed-upon methods of citing evidence/sources when presenting findings or arguments. See examples for scientists, for attorneys, for Wikipedia contributors, and for students. (Sadly, not everyone plays along, and the list of “acceptable” sources is often up for grabs.)

“Is this the golden age of evidence-based misinformation?”

On the AI side, it’s a work in progress, to put it mildly. Evidence-based misinformation is a substantial risk as we rely more on artificial intelligence/machine learning. Apps often provide information without linking to sources. Now the most sophisticated consumer-facing bots are beginning to cite evidence supporting their claims, a move toward accountability. But state-of-the-art generalized AI/search still produces plenty of flawed statements. People might be tempted to believe an algo is producing fact-checked or evidence-based responses, without ever knowing how well those responses represent the cumulative body of knowledge.

When prompted about how it cites evidence, ChatGPT responded that “As an AI language model… [it] does not have the ability to conduct original research or cite evidence in the traditional sense. However, ChatGPT can provide information based on the patterns it has learned from the text data, which may include references to specific sources or studies…. It’s always a good idea to verify information from multiple sources and consult with experts when making important decisions based on that information.”

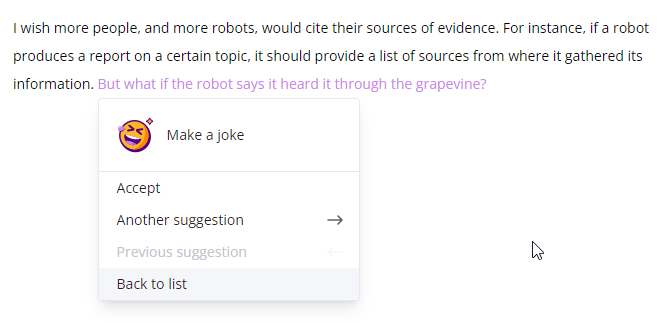

But bright spots abound: The writing assistant Wordtune Spices helps authors cite supporting evidence when making factual statements. Meta has a research model for gathering up citations and helping human Wikipedia editors review them (more below). And of course, numerous specialized algorithms built atop an evidence base inform very specific decisions, such as how to treat atrial fibrillation.

Guidelines for citing evidence

- Systematically cite supporting sources: Numbers and facts don’t speak for themselves

- Point to the specific supporting statements or data, rather than simply linking to source articles or reports

- Provide evidence appropriate to the type of claim being supported (e.g., factual knowledge, value judgment, or proposed solution)

- Avoid cherry-picking evidence: Acknowledge whether findings are conclusive, or whether reasonable doubts and counterarguments remain
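To make these guidelines more concrete, here is a minimal Python sketch of a claim record that could be checked against them. The `Claim`, `Citation`, and `ClaimType` names and the warning rules are hypothetical illustrations, not part of any existing tool. It checks three of the four guidelines mechanically; whether the evidence suits the type of claim still takes human judgment, so the sketch only records the claim type.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class ClaimType(Enum):
    # The three kinds of claims discussed in this post (hypothetical labels).
    FACT = "fact/knowledge"
    VALUE = "judgment/value"
    POLICY = "policy"


@dataclass
class Citation:
    source: str                    # e.g., a journal article, report, or web page
    passage: Optional[str] = None  # the specific supporting statement or data point


@dataclass
class Claim:
    text: str
    claim_type: ClaimType
    citations: list[Citation] = field(default_factory=list)
    conclusive: bool = False                          # are the findings settled?
    caveats: list[str] = field(default_factory=list)  # doubts or counterarguments


def guideline_warnings(claim: Claim) -> list[str]:
    """Return a warning for each guideline the claim visibly fails to follow."""
    warnings = []
    # Guideline 1: systematically cite supporting sources.
    if not claim.citations:
        warnings.append("no supporting sources cited")
    # Guideline 2: point to specific statements/data, not whole sources.
    if any(c.passage is None for c in claim.citations):
        warnings.append("citation links to a whole source, not a specific passage")
    # Guideline 4: acknowledge doubts when findings are not conclusive.
    if not claim.conclusive and not claim.caveats:
        warnings.append("inconclusive finding with no doubts or counterarguments acknowledged")
    return warnings
```

For example, a factual claim about folic acid that cites a report but no specific passage, and is marked conclusive, would produce the single warning "citation links to a whole source, not a specific passage".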

What does it mean to state a claim?

Claim: To state to be true, especially when open to question; assert or maintain. (American Heritage Dictionary)

Stating Facts/Knowledge

Oftentimes these claims are easily fact-checked, such as saying that scientists named a fungus after SpongeBob SquarePants. Sometimes statements synthesize a set of facts into derived knowledge that is relatively easy to confirm or falsify, such as health guidance on the importance of dietary folic acid in preventing birth defects. In either case, a human could likely find the original source in a library, fact-check using Wikipedia, or use search engines.

Keep in mind that Fact/Knowledge statements aren’t necessarily true, and are better viewed as propositions of fact. “The word ‘fact’ here means that a claim is either true or false in a potentially verifiable way” (Herrick, James A., Argumentation, Allyn and Bacon:1995, page 15). We’ve quoted an out-of-print book to highlight how sources have traditionally been cited.

Experimenting with a factual claim

Let’s look at ChatGPT. Our prompt: Did scientists in 2012 name a new fungus after SpongeBob SquarePants? AI response: “Yes…. The scientists named the fungus after SpongeBob SquarePants due to its sponge-like appearance, and as a nod to the fun and lighthearted nature of the cartoon character. Naming new species after popular cultural figures is not uncommon in the scientific community and is often done to generate public interest and raise awareness….” [2-feb-2023] Alas, no citation.

Follow-up prompt: Please cite your source. ChatGPT provided a reasonable-looking list of three references. Then things got sticky: Two links (from the Telegraph and LiveScience) mentioned SpongeBob but threw 404 errors (darn you, Internet). The third correctly pointed to the journal Mycologia and correctly named the article title, published in 2011 (not 2012) ~ but the authorship was only 67% correct, and its URL led to a different Mycologia article. For this factual claim, the AI is solidly in the ballpark, not too shabby for early days.

Wishlist item #1: ChatGPT providing references without being prompted for them.

How should an AI present its evidence?

The hand-curated Golden presents Wikipedia-like articles with specific, traditional citations as popups, and its left-hand column holds tables of sources, related companies, and other resources. The Wordtune writing assistant is an impressive service (from Israeli startup AI21 Labs); its Spices menu suggests writers add definitions, explanations, counterarguments, or historical facts. Also, jokes about citing sources! Currently, when Wordtune states a fact, it points to the specific page supporting that statement, which is preferable to a general link.

Wishlist item #2: Spices pointing to a specific supporting statement, not a page, when applicable.

Generative AI might produce evidence-based misinformation ~ incomplete evidence telling a partial truth. But humans are perfectly capable of doing the same. Here’s hoping we can make each other better.

Precise truth is paramount. Every citation counts. Misstatements of basic facts might not be hugely controversial, but acceptance of inaccuracies is a slippery slope to urban myths, pseudoscience, or worse. We suspect misinformation travels around the world while the truth is putting its boots on.

Wishlist item #3: More high-end, human fact-checking.

Humans+AI collaborate on citations: A Meta project

Meta AI says it “has developed the first model capable of automatically scanning hundreds of thousands of citations at once to check whether they truly support the corresponding claims. As a knowledge source for our model, we created a new dataset…. [The model] calls attention to questionable citations, allowing human editors to evaluate the cases most likely to be flawed without having to sift through thousands of properly cited statements. If a citation seems irrelevant, our model will suggest a more applicable source, even pointing to the specific passage that supports the claim.”

Humans+AI: Meta AI has open-sourced its retrieval library, Sphere. The research team also created a Side Editor where humans could collaborate with their model (apparently no longer available). “In particular, we will present you with passages and a highlighted claim from a recent version of Wikipedia. Accompanying the claim is a pair of two possible citations that may support it. You will be asked which of the citations is a better source to support the given claim, if any.”
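For intuition about the “which citation is better, if any” question, here is a toy Python sketch. It is not Meta’s method: their system relies on learned neural models, whereas this sketch swaps in simple word overlap. The function names, the stopword list, and the 0.6 threshold are all hypothetical. It picks the single sentence in each source that best covers the claim (echoing the goal of pointing to a specific passage) and returns None, flagging the claim for a human, when neither source clears the threshold.

```python
import re
from typing import Optional

# Hypothetical stopword list chosen for this toy example.
STOPWORDS = {"a", "an", "the", "of", "in", "to", "and", "is", "was",
             "for", "after", "by", "on", "its"}


def content_words(text: str) -> set[str]:
    """Lowercased words in `text`, excluding the toy stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def support_score(claim: str, passage: str) -> float:
    """Share of the claim's content words that also appear in the passage (0.0 to 1.0)."""
    claim_words = content_words(claim)
    if not claim_words:
        return 0.0
    return len(claim_words & content_words(passage)) / len(claim_words)


def best_passage(claim: str, source_text: str) -> tuple[str, float]:
    """Split a source into sentences; return the sentence that best covers the claim."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", source_text) if s.strip()]
    if not sentences:
        return ("", 0.0)
    scored = [(s, support_score(claim, s)) for s in sentences]
    return max(scored, key=lambda pair: pair[1])


def compare_citations(claim: str, source_a: str, source_b: str,
                      threshold: float = 0.6) -> Optional[tuple[str, str]]:
    """Which source better supports the claim, and with which passage? None means 'neither'."""
    passage_a, score_a = best_passage(claim, source_a)
    passage_b, score_b = best_passage(claim, source_b)
    if max(score_a, score_b) < threshold:
        return None  # flag for human review: no source clearly supports the claim
    return ("A", passage_a) if score_a >= score_b else ("B", passage_b)
```

With the claim “Scientists named a new fungus after SpongeBob SquarePants.”, a made-up source A about the cartoon’s air date, and a made-up source B reading “Mycologists described a new mushroom species in 2011. They named the fungus after SpongeBob SquarePants.”, the sketch returns `("B", "They named the fungus after SpongeBob SquarePants.")`. Word overlap is easily fooled by paraphrase and negation, which is exactly why learned models and human reviewers are needed.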

Facts are just the beginning. Meaningful citations require that authors recognize the type of claim they are making, and reference suitable evidence. Now that we have explored the state of the art in how generalized AI supports factual claims, our next post will look at two more complex types of claims and how AI might help people find supporting information. We’ll look at how evidence/support should be provided for Statements of Judgment/Value, or ‘propositions of value’ that judge morality or merit (‘Ambush is a terrible movie’), and for Propositions of Policy, statements urging that a particular action be taken (‘governments should cover all medical vaccination costs’).