1. Biased instructor response → Students shut out

Definitely not awesome. Stanford’s Center for Education Policy Analysis has released Bias in Online Classes: Evidence from a Field Experiment. “We find that instructors are 94% more likely to respond to forum posts by white male students. In contrast, we do not find general evidence of biases in student responses…. We discuss the implications of our findings for our understanding of social identity dynamics in classrooms and the design of equitable online learning environments.”

One simple solution, sometimes deployed for decision debiasing, is to make interactions anonymous. However, drawing on nudge concepts, the researchers suggest that a “more sophisticated approach would be to structure online environments that guide instructors to engage with students in more equitable ways (e.g., dashboards that provide real-time feedback on the characteristics of their course engagement).”


2. Wrong data cut → So what?

After the recent debacle with success theater at GE, it’s a good time to revisit how we present evidence to a business audience. Domo’s Brent Dykes (@analyticshero) describes three traps to avoid in data storytelling: the data cameo, the data cut, and data decoration. Analysts who cast data in a cameo role have a predetermined agenda and cherry-pick only the data that support it (foolish vanity metrics at best; career-limiting, unethical behavior at worst).

In the second trap, “once a particular cut of the data yields an interesting insight, nothing is done to then package it up for others…. Unfortunately, like an unedited director’s cut, the data cut leans too heavily on the persuasiveness of the raw facts,” and the audience fails to appreciate their significance. People who fall into the third trap, decoration (or eye candy), create a pretty visualization but don’t emphasize the key takeaways, so they fail to present anything meaningful.

P.S. Speaking of ethics, a Wired story asks whether there should be a Hippocratic Oath for data scientists.

3. Leaky tech pipeline → Lack of diversity

By now, the lack of diversity in tech is well documented. It’s not just a question of fairness but also of creating the most value by building the strongest possible workforce. What’s less obvious is how to make diversity happen. Oakland’s Kapor Center for Social Impact (@KaporCenter) is addressing the problem with the LeakyTechPipeline.com initiative: “We are excited to release our new report & website that details the complexity of tech’s diversity problem, provides a framework to understand why the lack of diversity in tech, & provides a blueprint for comprehensive solutions.” The site features research evidence on diversity issues and possible solutions. #LeakyTechPipeline

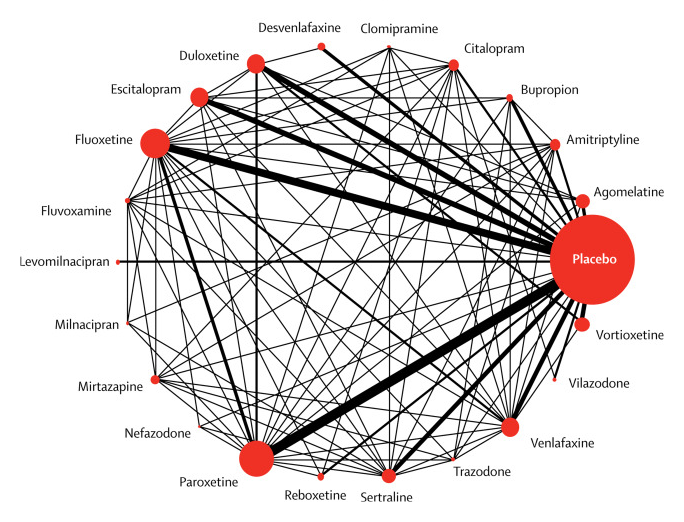

4. Prescribe antidepressants → Treat major depression

An impressive network meta-analysis, comparing drug effects across numerous studies, shows that “All antidepressants were more efficacious than placebo in adults with major depressive disorder. Smaller differences between active drugs were found when placebo-controlled trials were included in the analysis…. These results should serve evidence-based practice and inform patients, physicians, guideline developers, and policy makers on the relative merits of the different antidepressants.” The findings appear in The Lancet.

Quick insights.

Posted by Tracy Allison Altman on