How we decide is no less important than the data we use to decide. People are recognizing this and creating innovative ways to blend what, why, and how into decision processes.

1. Apply behavioral science → Less cognitive bias

McKinsey experts offer excellent insight in “Behavioral science in business: Nudging, debiasing, and managing the irrational mind.” This recent podcast explains how to apply behavioral science to performance management, hiring, and other key functions. “With nudges — subtle interventions based on insights from psychology and economics — we can influence people’s behavior without restricting it.”

To improve diversity efforts, companies are attempting to minimize unconscious bias. “It starts with recruiting processes, behavioral design of how to make them function in a way that doesn’t favor those —we call it a ‘mini me’ bias— who have always been recruited to the company before and would be recruited all the time again.” To ensure diverse leadership in the future, “you have to be aware of those little biases and fight them….”

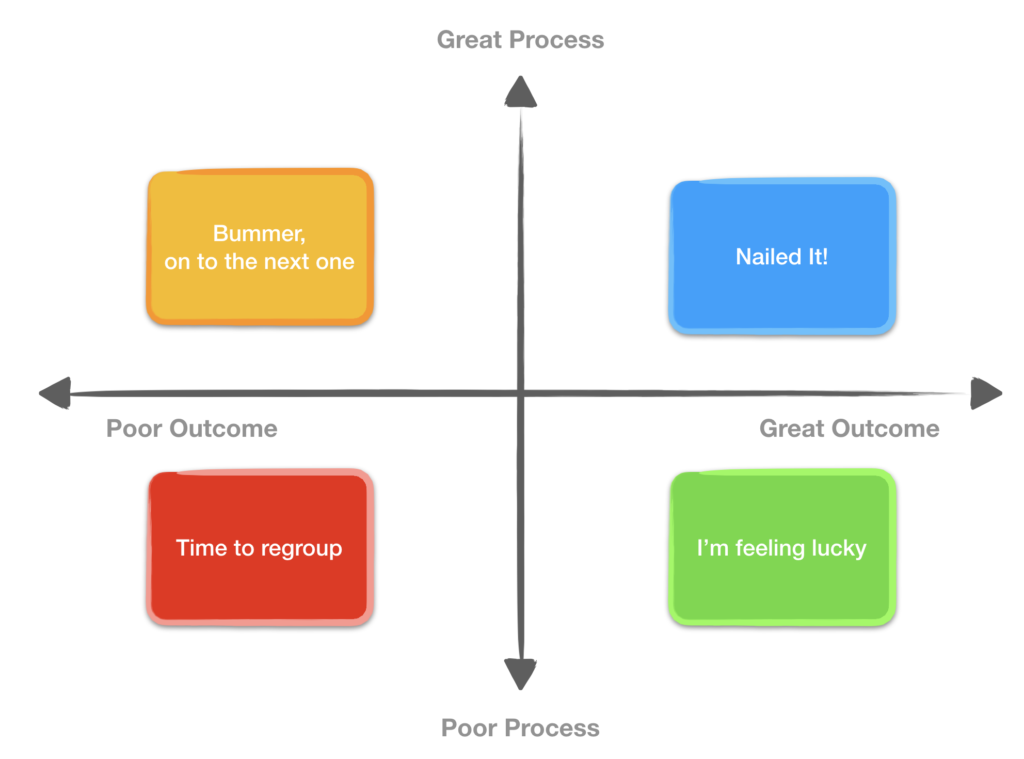

Source: Tomasz Tunguz.

2. Quality decision process → Predictable outcomes

After the Golden Rule, perhaps the most important management lesson is learning to evaluate the quality of a decision process separately from the outcome. Tomasz Tunguz (@ttunguz) reminds us in a great post about Annie Duke, a professional poker player: “Don’t be so hard on yourself when things go badly and don’t be so proud of yourself when they go well…. The wisdom in Duke’s advice is to focus on the process, because eventually the right process will lead to great outcomes.”

3. Reinvent the meeting → Better decisions

Step back and examine your meeting style. Are you giving all the evidence a voice, or relying on the same old presentation theater? Kahlil Smith writes in strategy+business: “If I catch myself agreeing with everything a dominant, charismatic person is saying in a meeting, then I will privately ask a third person (not the presenter or the loudest person) to repeat the information, shortly after the meeting, to see if I still agree.” Other techniques include submitting ideas anonymously, considering multiple solutions and scenarios, and holding a decision pre-mortem with a diverse group of thinkers. More in “Why Our Brains Fall for False Expertise, and How to Stop It.”

4. Asking good questions → Productive data science

Gage Peake at Decisive Data describes the importance of starting with a good question and understanding which type of question is being asked. In “Four types of questions for data scientists,” she gives examples of exploratory, inferential, predictive, and causal questions. For instance, an exploratory question might ask how variables are distributed; a predictive one might ask how we can forecast sales going forward.

5. Go beyond the journal → Better medical decisions

Evidence from science journals is insufficient for many medical decisions. A BMJ column, “Redefining the ‘e’ in evidence-based medicine,” suggests that evidence-based medicine should shift away from heavy reliance on journal articles. “Evidence has accumulated across a spectrum of different interventions that journal publications cannot be trusted.” Reporting bias is a growing threat. Plus, “below every 10-page trial report lies a far deeper and more complex web of data and information needing attention.” Via @carlheneghan.

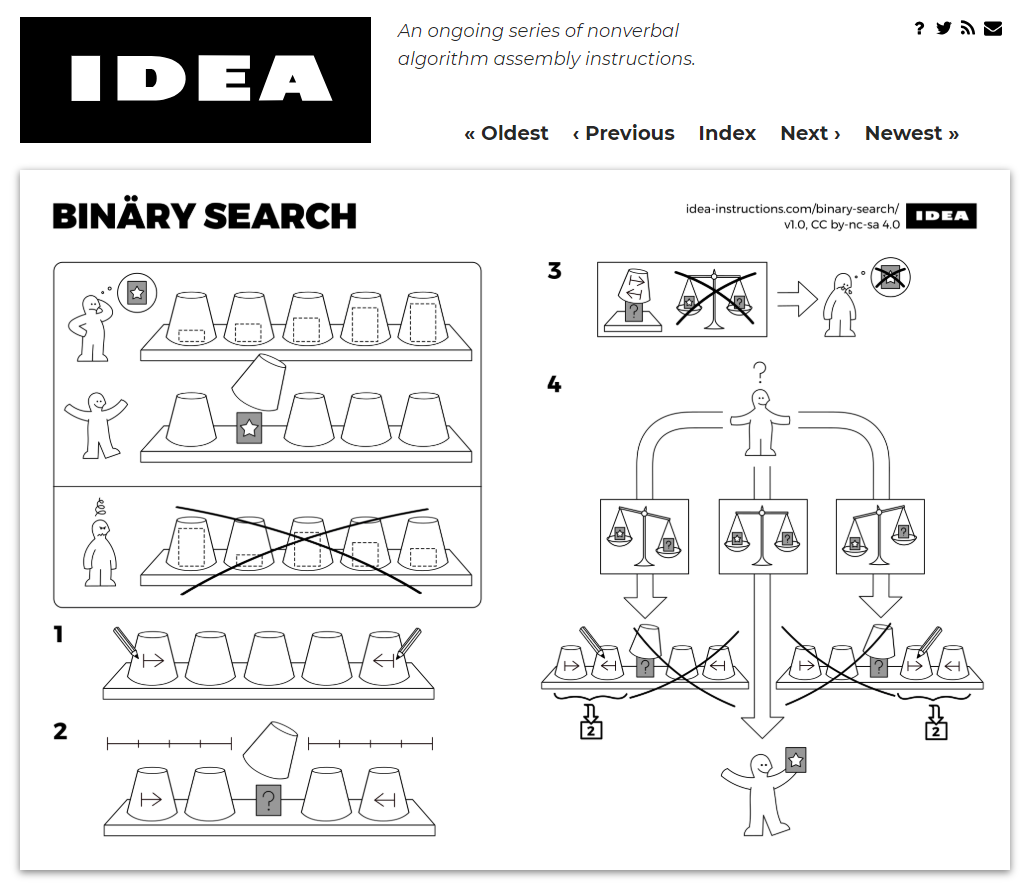

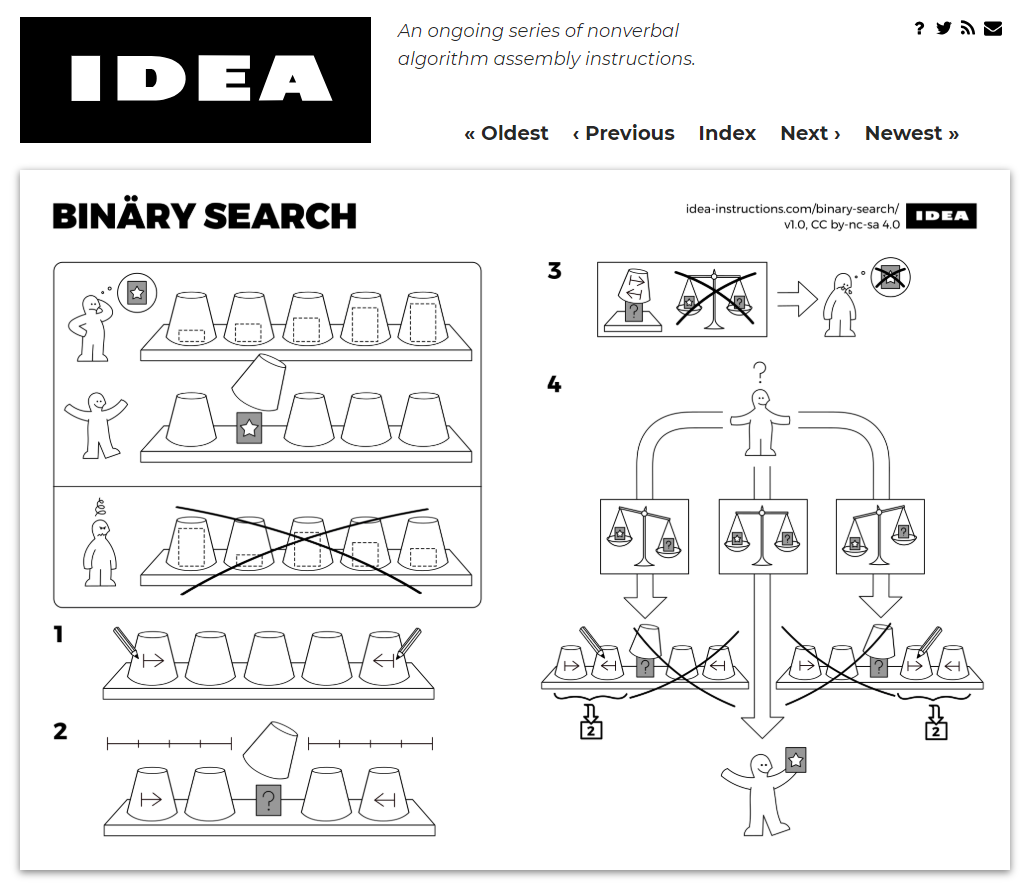

6. Visual, nonverbal explanation → Global audience

Remember the fun you had building that BILLY bookcase, possibly while eating Swedish meatballs? The IDEA project creates nonverbal, IKEA-style assembly instructions for algorithms, such as this one for binary search. It’s a clever way to walk people through fundamental concepts.
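For readers who want a text counterpart to the pictures, here is a minimal binary search in Python. It is a sketch of the standard algorithm, not something derived from the IDEA instructions themselves: compare the target with the middle element of a sorted list, then discard the half that cannot contain it. The function name `binary_search` and the sample list are illustrative.

```python
def binary_search(items, target):
    """Return the index of target in the sorted list items, or -1 if absent."""
    lo, hi = 0, len(items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2      # middle of the range still under consideration
        if items[mid] == target:
            return mid
        if items[mid] < target:
            lo = mid + 1          # target can only be in the right half
        else:
            hi = mid - 1          # target can only be in the left half
    return -1                     # range is empty: target is not present


shelf = [2, 5, 8, 12, 16, 23, 38, 56, 72, 91]  # must already be sorted
print(binary_search(shelf, 23))  # 5
print(binary_search(shelf, 7))   # -1
```

Each comparison halves the remaining range, so a sorted list of a million items needs at most about 20 comparisons.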

Quick insights.

– More transparency, reproducibility needed for ML development. Pete Warden (@petewarden) explains the reproducibility crisis in machine learning. The problem is so bad that developers sometimes can’t retrain their own models and get similar results. “[M]achine learning is by far the worst environment I’ve ever found for collaborating and keeping track of changes.”

– What is the best way to communicate odds ratios? Stephanie Evergreen (@evergreendata) offers useful examples. “Things like ‘5.8 greater odds’ [lack] practical meaning to most of us. We had to work hard to balance out what is digestible to the public and most precise to the scientists responsible for data collection and analysis.” Evergreen prefers framing it in terms of likelihood, using language such as “5.8 times more likely.”
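One reason “5.8 greater odds” is hard to read is that odds and probability differ: the odds of an outcome with probability p are p / (1 − p). An odds ratio matches a risk ratio (“times more likely”) only approximately, when the outcome is rare. The sketch below assumes a baseline risk p0 in the comparison group, a number the post does not give, and uses the standard conversion RR = OR / (1 − p0 + p0 · OR). The function names and the baseline values are illustrative.

```python
def odds(p):
    """Odds corresponding to a probability p: p / (1 - p)."""
    return p / (1 - p)


def risk_ratio_from_odds_ratio(odds_ratio, baseline_risk):
    """Risk ratio ("times as likely") implied by an odds ratio, given the
    outcome's probability in the comparison group (an assumed input)."""
    p0 = baseline_risk
    return odds_ratio / (1 - p0 + p0 * odds_ratio)


OR = 5.8  # the odds ratio quoted above
for p0 in (0.01, 0.10, 0.30):  # hypothetical baseline risks
    rr = risk_ratio_from_odds_ratio(OR, p0)
    # Sanity check: the implied risks p0 and rr * p0 reproduce the odds ratio.
    assert abs(odds(rr * p0) / odds(p0) - OR) < 1e-9
    print(f"baseline risk {p0:.0%}: about {rr:.1f} times as likely")
# Prints roughly 5.5, 3.9 and 2.4 for the three baselines.
```

So if you adopt the “times more likely” framing, the plain-language number depends on how common the outcome is: for rare outcomes it stays close to the odds ratio, while for common ones it is noticeably smaller.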

Posted by Tracy Allison Altman on