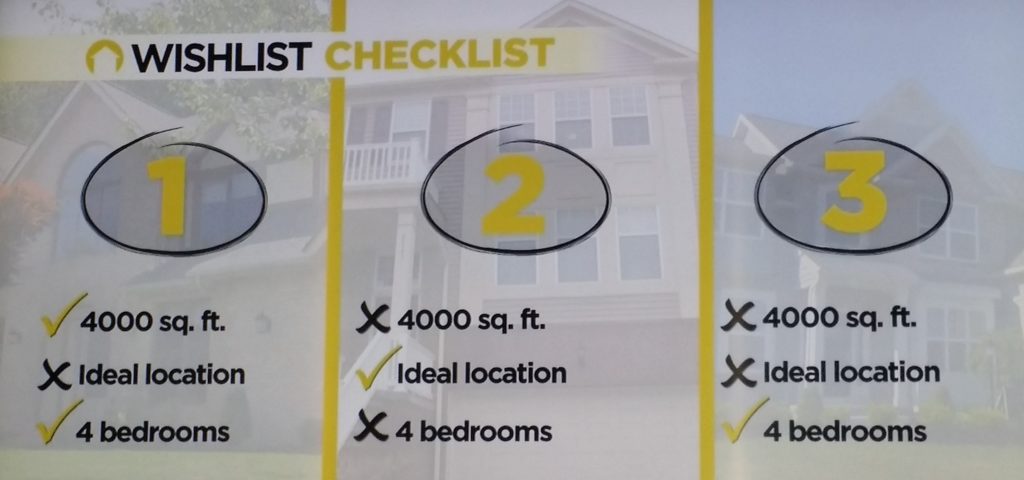

House Hunters International is great guilty-pleasure viewing, especially while nursing a cold or avoiding the plague. (Pro: Insider views of interesting cities. Con: Reminders of the unique pain of choosing a place to live.) It’s easy to add city center, natural light, and extra bedrooms to your wish list, but painful to accept the inevitable trade-offs when searching for homes within budget.*

Real estate apps aren’t so helpful as decision time approaches: Algorithms struggle to recognize the nuances of home design and personal preferences. Early searches can save a ton of time, but your results are only as good as your input. This is why human-in-the-loop AI is like House Hunters.

What is human-in-the-loop AI?

Sexy stories about artificial intelligence show the rise of belligerent or awesome machines taking over our world. Automation is also a popular use case IRL: think customer service, underwriting, and credit decisions.

However, the most interesting AI, where people work alongside algorithms, is still emerging. Typically this segment is called human-AI collaboration, augmented intelligence, or human-in-the-loop AI. In the consumer space, no moniker is really necessary: It’s just you or me, interacting with an app that helps us decide which size T-shirt to order. In business applications, rather than allowing a black-box algorithm to decide independently, augmented AI makes people aware of the technology’s design and capabilities, and accepts their input. Humans have varying levels of control over decision rules, input data, and resulting outcomes.

Which brings us to my House Hunters analogy. A home search is most productive when people collaborate with a real estate app to define and refine what they’re looking for. People establish decision rules (‘within 10 miles of I-95’), or provide photos of homes they like (‘that Craftsman on 34th Ave’). The AI recommends and the buyer responds. This is where a glimpse into the black box can substantially build trust, improve our user experience, and create better results. Simple example: An app suggesting a “pretty good” or 90% match, such as a home two blocks outside your desired geography.
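To make the “90% match” idea concrete, here is a minimal Python sketch. Everything in it is hypothetical: the `Listing` fields, the `match_score` function, the 10-mile limit, and the penalty per block are illustrative assumptions, not how any real estate app actually works.

```python
from dataclasses import dataclass


@dataclass
class Listing:
    address: str
    miles_to_i95: float        # distance to the highway, in miles
    blocks_outside_area: int   # 0 means inside the buyer's desired geography


def match_score(listing: Listing, max_miles: float = 10.0,
                penalty_per_block: float = 0.05) -> float:
    """Return a 0-to-1 match score under assumed, buyer-adjustable rules."""
    # Hard decision rule set by the buyer: 'within 10 miles of I-95'.
    if listing.miles_to_i95 > max_miles:
        return 0.0
    # Soft rule: each block outside the desired area costs a small penalty,
    # so a near miss still surfaces as a "pretty good" match.
    score = 1.0 - penalty_per_block * listing.blocks_outside_area
    return max(score, 0.0)


near_miss = Listing("two blocks out", miles_to_i95=4.0, blocks_outside_area=2)
print(f"{match_score(near_miss):.0%} match")
```

The glimpse into the black box is the point: because the rule and the penalty are visible parameters, the buyer can see why a home scored the way it did and loosen or tighten either one.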

How big is your loop?

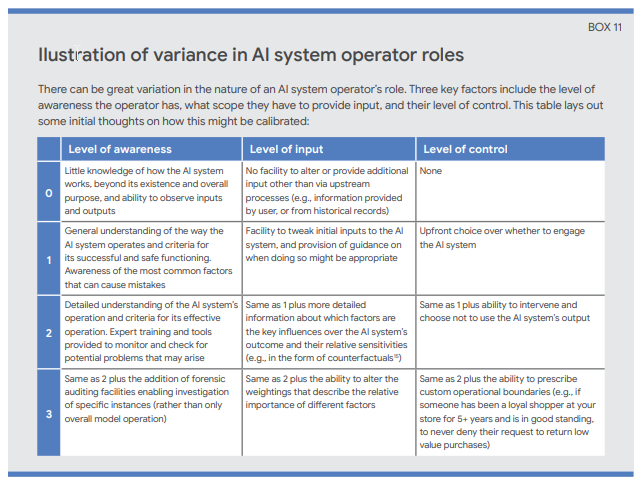

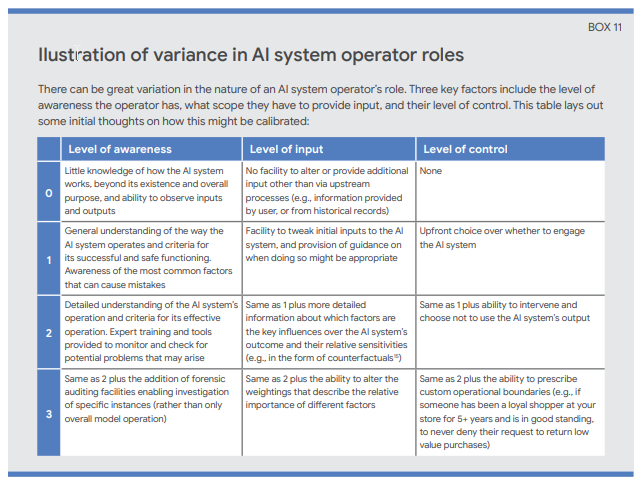

I’m an AI system operator? Sometimes human-in-the-loop AI means tech-savvy analysts engaging with, or training, a complex model. The machine might be learning quickly and closing a lot of loops. In a must-read 2019 white paper, Google describes scenarios where people collaborate with AI, reflecting increasing levels of human awareness, input, and control. It’s all laid out in tabular form (Box 11).**

At the risk of sounding nitpicky, it bugs me that the illustration is from the perspective of an ‘AI System Operator’. How many of us refer to ourselves that way? Nevertheless, people doing the interacting seem to range from unaware end users to managers with decision-making responsibility, which sounds about right.

Elsewhere in the section on human-AI collaboration, Google shares insight into thinking about permissions to tweak (or distance yourself from?) an algorithm. “To what extent can a decision be contested? In practice, people tend to be far less concerned about the process used to reach a decision if there is an option of meaningful human review.” So very true.

This framework is an important starting point, but it’s light on portraying situations where these activities might occur. It’s up to us to connect human-AI collaboration with typical business or consumer environments.

Some loops are bigger than others. Executives and subject matter experts often review algorithm designs or human assumptions and give feedback to improve predictions or recommendations. Insurance underwriting comes to mind. This is what I call closing the big loop: Gradual learning and refinement to generate useful results.

One final note.

Some may recall my earlier post comparing MCDA (Multi-Criteria Decision Analysis) to House Hunters. Weighing competing values at work really is a lot like negotiating with a life partner about your next home.* MCDA is a systematic way to sort through values and priorities when substantial data is available. Which won’t make giving up that 4th bedroom any less painful.
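For the curious, a weighted sum is one of the simplest MCDA methods, and it fits in a few lines of Python. This is only a sketch: the criteria, weights, ratings, and the `weighted_score` helper are all made up for illustration.

```python
def weighted_score(ratings: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted-sum score: one simple MCDA method among several."""
    total_weight = sum(weights.values())
    return sum(weights[c] * ratings[c] for c in weights) / total_weight


# Hypothetical weights (importance) and 0-10 ratings for two homes.
weights = {"location": 5, "natural_light": 3, "bedrooms": 2}
homes = {
    "city_flat": {"location": 9, "natural_light": 6, "bedrooms": 3},
    "suburban_house": {"location": 5, "natural_light": 7, "bedrooms": 9},
}

# Rank homes from best to worst weighted score.
ranked = sorted(homes, key=lambda name: weighted_score(homes[name], weights),
                reverse=True)
print(ranked)
```

With these made-up numbers the city flat wins despite its lack of bedrooms, because location carries the most weight. Change the weights and the ranking can flip, which is exactly the negotiation.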

*Kidding aside, I’m very fortunate to live in a lovely home. When many people don’t even have enough to eat, please give to the food bank in your city.

**Google, 5-Feb-2019. Perspectives on Issues in AI Governance. Figure: Box 11, Illustration of variance in AI system operator roles, p. 23.

Posted by Tracy Allison Altman on 11 August 2020.