1. Machines Gone Wild → Digital trust gap

Last year I spoke with the CEO of a smallish healthcare firm. He had not embraced sophisticated analytics or machine-made decision making, with no comfort level for ‘what information he could believe’. He did, however, trust the CFO’s recommendations. Evidently, these sentiments are widely shared. — Tracy

A new KPMG report reveals a substantial digital trust gap inside organizations: “Just 35% of IT decision-makers have a high level of trust in their organization’s analytics”.

Blended decisions by human and machine are forcing managers to ask Who is responsible when analytics go wrong? Of surveyed executives, 19% said the CIO, 13% said the Chief Data Officer, and 7% said C-level executive decision makers. “Our survey of senior executives is telling us that there is a tendency to absolve the core business for decisions made with machines,” said Brad Fisher, US Data & Analytics Leader with KPMG in the US. “This is understandable given technology’s legacy as a support service…. However, it’s our view that many IT professionals do not have the domain knowledge or the overall capacity required to ensure trust in D&A [data and analytics]. We believe the responsibility lies with the C-suite…. The governance of machines must become a core part of governance for the whole organization.”

More evidence. The stellar Machine Learnings newsletter offers two recent examples: In Moving from a Default Trust to Default Skeptic Society, Michael Dempsey observes that today humans largely trust what they hear/see, but tomorrow they will not. Bad Bots and Deep Fakes won’t go away anytime soon. Meanwhile, more algorithm bias is being discovered. Li Zhou explains in Politico that “Google’s Translate tool ‘learns’ language from an existing corpus of writing, and the writing often includes cultural patterns regarding how men and women are described.” Because the model is trained on biased data, it produces biased results, such as routinely making nurses female and doctors male. (Ed. note: When is the training data not to blame? When humans decide to use biased data sets?)

Hey, data people: Get free tools for guiding the Data‑to‑Decision workflow. Learn methods, find new insights, and collaborate with colleagues.

Ugly Research has your back.

2. Microlearning for Data Science → More overload?

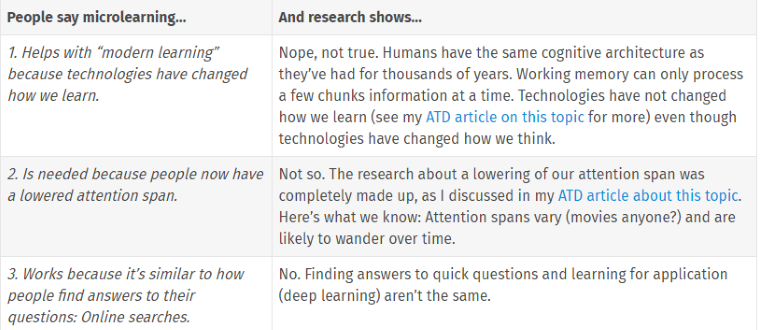

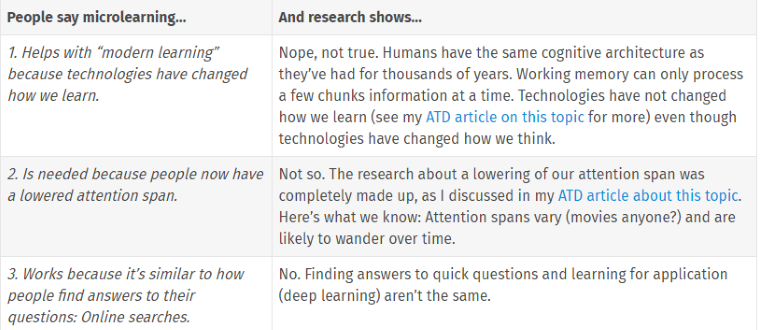

Educating enough data scientists is a pain point for many institutions. Designing effective training is particularly difficult because the content is complex, and unicorn subject matter experts are typically too busy to develop materials. Enter microlearning as a possible answer. Patti Shank, PhD and learning expert (@pattishank), recaps What Research Has to Say About Microlearning and Macrolearning. She quickly gets to the heart of the matter, explaining What’s True. What’s Not. And What’s Nonsense.

Microlearning isn’t new. “It’s a repackaging of previous learning ideas, explains Donald Clark, writer, vocal critic on silliness in workplace learning, and former CEO. You’ve likely heard of chunking, learning nuggets, and learning objects. Chunking, for example, is a strategy we use to better work within the constraints of working memory.“ Regardless of the moniker, small (or micro, or nano) learning units must “include critical instructional elements such as practice, feedback, and reflection.” Otherwise you run the risk of ineffective training and information overload.

Data science training in particular centers around practice. Shank says “One of the differences between some of what people are calling microlearning and appropriate chunking is that good chunking shows the organization of the topic, which supports mental processes and helps people build usable knowledge…. Disconnected chunks make these critical outcomes harder. Microlearning elements can seem disconnected, so they don’t help people understand how different topics connect.” No matter the design, none of this has to be formal. Data scientists tend to learn hard things on their own, and from others.

Related: Why it’s so hard to train data scientists. Great discussion (see the comments).

Quick insights.

Neural network AI is simple. So stop pretending you are a genius. Funny rant by Brandon Wirtz about people who implement code that could fit on a t-shirt. “So you trained a neural network to do something no human has been able to do… like detect if people are gay just from a photo. No. No you didn’t. Neural networks are dumb black box systems.”

Not Awful Training for Statistics. Jessica Hartnett (@Notawful) has a refreshing, humorous approach.

Posted by Tracy Allison Altman on

Photo credit: Brett Jordan on Unsplash.