I’ll be at VentureBeat’s Transform AI conference July 10-11 in San Francisco. Let me know if you’re attending; would be great to meet. -Tracy

It’s not always easy staying on the AI bandwagon. Claims of algorithmic bias abound and (mis)applications threaten people’s trust. Not everyone wants their face recognized or their driver’s license scanned.

Developers must shine a light into the black box and show how people might collaborate with AI on complex decision-making. Many want to require so-called explainable AI, but that’s a convoluted ask: Does transparent mean we can see lines of code, or see why a particular decision was made? Zerilli et al. make an intriguing case in Transparency in Algorithmic and Human Decision-Making: Is There a Double Standard?, concluding that “Both humans and algorithms are better off explaining why and intention, not technical details.” (I wrote about explaining intention/action here.)

People want experiential. Why not apply it to AI learning?

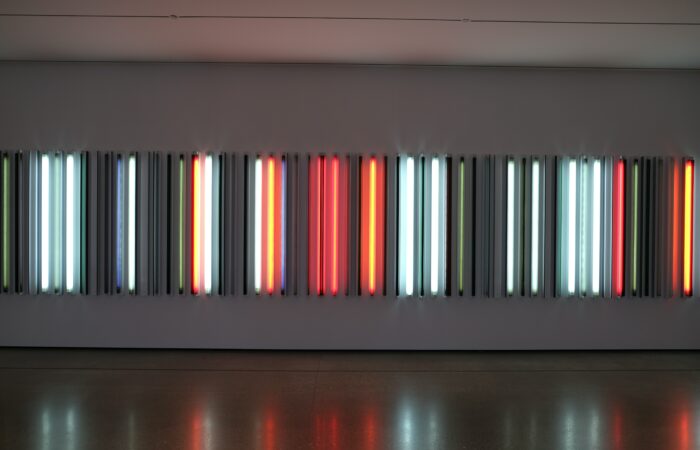

How can we help people understand the conclusions drawn and actions recommended by AI – without getting swamped in minutiae? I like to think I have a practical solution: Let people work alongside AI on challenges. We know that experiential events and immersive installations are in high demand: everything from Sleep No More to 29 Rooms to Meow Wolf.

So I’m proud to introduce the Museum of AI, combining immersive theater, art installations, puzzles, and hands-on experiential learning about AI. We have assembled a great team to bring real-world case studies to life in an escape-room environment: Rethinking experiential to shine a light on enterprise AI. It would be great to hear your thoughts.

Posted by Tracy Allison Altman on 8 July 2019.