Since my work is about humans+AI deciding together, I attended DAAG 2019 in beautiful downtown Denver, exploring the “intersection of decision analysis and data science to take decision-making to the next level.” The intent was for decision analysts to better understand data science and “support data-centric decision-making” while data scientists could better “guide the use of their data.”

Why do DA and DS roles seem so far apart? Where does decision science fit in? Decision analysis focuses on big, strategic decisions and is closely aligned with portfolio management in pharma and energy: It’s about evaluating and facilitating decisions being made by others. The potentially embarrassing outcome is screwing up a $1B+ decision. DA applies concepts like decision trees, influence diagrams, and value of information. DAAG is often championed by Stanford alumni in risk analysis/operations research and is sponsored by the Society of Decision Professionals.

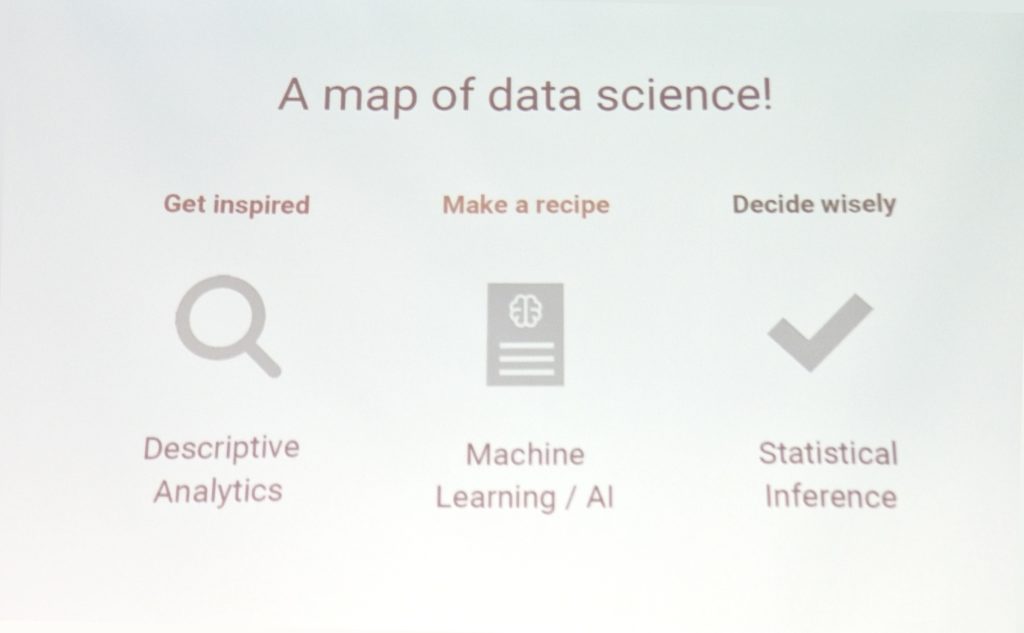

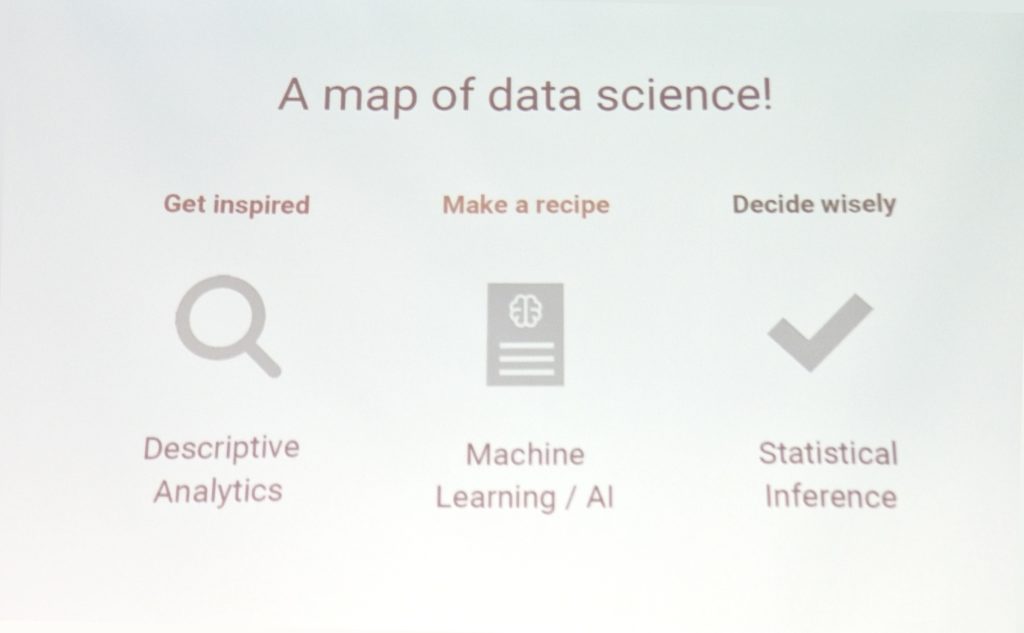

Contrast DA with the wild-west atmosphere of data science. Algorithm development focuses on smaller decisions at scale, so the potentially embarrassing outcome is screwing up billions of decisions. DAAG panelists talked about the hazards of bias, low-quality training data, and overly complex models. (I would have enjoyed seeing an anonymized case study.) Decision and behavioral science received little focus, with one notable exception: Cassie Kozyrkov (@quaesita), Chief Decision Scientist at Google Cloud, gave the keynote. Loved her closing statement: It’s not the “genie that’s dangerous, it’s the unskilled wisher”.

I’d like to see all this converge as decision management: What are we trying to decide, and how will we decide? Jim Driscoll, head of Intel’s Decision Quality program, described their efforts to embrace AI.

Some participants wondered whether DA will be overrun by AI. Stanford’s Isaac Faber said a better question is how DA missed business intelligence. Comparing the adoption of Tableau dashboards to traditional DA presentations, he said “the votes are in”. But I see four opportunities:

1. Know what you don’t know: Shine a light on uncertainty. Highlighting distinctions between DA and DS methods, one participant claimed “Uncertainty isn’t in the database.” Data scientists responded: Why, yes, it is. We can evaluate a data set and characterize the question marks. Think of this as “little u” or lowercase uncertainty. Data science can add value by converting a &@%-ton of data into a set of known unknowns that help us make better decisions.

But the head of pricing for Google Cloud Platform, Thomas Seyller, said in their dynamic market “We’re making decisions today we couldn’t have foreseen six months ago.” And that’s because “Big U”, or uppercase uncertainty, is *not* in the database (not that we can see, anyway). These Rumsfeldian unknown unknowns, the things we don’t know we don’t know, tend to slap us upside the head. After encountering an unk-unk, we must adjust our thinking before returning to our comfort zone.

2. Know when to stop: Value of information. We keep learning more and more about less and less. DS projects are at risk of running amok with little incremental benefit. Microsoft’s John Mark Agosta said ‘accuracy addiction’ is a widespread problem. DA’s use of value of information concepts could be the antidote.

3. Know how to bring people together: Follow a studio model. One presenter is leading what I envision as DA+DS, deciding together. Julie Schiffman, analytics VP at Pfizer, described their emulation of a music studio, where creators collaborate. To overcome conventional PowerPoint Theater, Pfizer created an analytics studio where decision makers and data analysts can test ideas and predict outcomes in real time. This was the best example I saw of true collaboration, building trust and understanding between disparate groups. Unfortunately her concept was only mentioned in a pharma track event, so the entire group didn’t learn about it.

4. Know how to explain. The more things change, the more they stay the same. Unfortunate AI behavior has triggered demands for accountability, explainable AI, and AI audits, all of which pose huge challenges. Meanwhile, SDP long ago saw the need for a human code of DA conduct. Even for insiders it can be tough to discern or explain how decisions are made, but people in DA and DS must add transparency and build trust.
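To make the value-of-information idea from opportunity 2 a bit more concrete, here is a minimal Python sketch of the expected value of perfect information (EVPI), a standard DA calculation. Nothing in it comes from a DAAG talk: the launch/skip decision, the payoffs, and the probabilities are all made-up numbers for illustration. The point is only that EVPI puts a ceiling on what any further data collection or modeling could be worth.

```python
# Hypothetical example: all numbers below are invented for illustration.

def expected_value(payoffs, probs):
    """Probability-weighted average payoff of one action across states."""
    return sum(p * v for p, v in zip(probs, payoffs))


def evpi(payoff_table, probs):
    """Expected value of perfect information.

    payoff_table maps each action to a list of payoffs, one per state.
    probs lists the probability of each state, in the same order.
    """
    # Best we can do today: commit to the single action with the highest EV.
    best_without_info = max(
        expected_value(row, probs) for row in payoff_table.values()
    )
    # Best we could do if we learned the state first: pick the best action
    # in each state, then weight by how likely that state is.
    best_with_info = sum(
        probs[s] * max(row[s] for row in payoff_table.values())
        for s in range(len(probs))
    )
    return best_with_info - best_without_info


# States: [high demand, low demand] (assumed probabilities)
PROBS = [0.4, 0.6]
# Payoffs in $M for each action under each state (assumed values)
PAYOFFS = {
    "launch": [100.0, -40.0],
    "skip": [0.0, 0.0],
}

if __name__ == "__main__":
    print(f"EVPI: about ${evpi(PAYOFFS, PROBS):.0f}M")
```

With these made-up inputs, launching has an expected value of $16M, knowing demand in advance would be worth $40M in expectation, and so the EVPI is about $24M. If the next round of model tuning costs more than that, or can only resolve a sliver of the uncertainty, that is a signal to stop.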

DAAG 2020 will be led by Pat Leach, who wrote Why Can’t You Just Give Me the Number? Fantastic book title.

Posted by Tracy Allison Altman on 30 April 2019.

Photo credits: Lubo Minar on Unsplash, and bantersnaps on Unsplash.