Jerry Seinfeld was wrong when he claimed public speaking is our #1 fear. I’m pretty sure we’re more afraid of having our decisions scrutinized. Adding to the fun, algorithmic decisions are now under pressure too.

It is rather painful to have decisions second-guessed before the numbers come in, and even worse if things go pear-shaped. Leadership requires overcoming fear, looking people in the eye, and explaining. But we’re discovering barriers that prevent successful people, and the algorithms they create, from making cringe-free decisions. Here are some ways around them:

Explain why, not how.

A good decider must explain decisions persuasively to friends and frenemies alike. But we aren’t always in a position to reveal our rationale: How much transparency is realistic given privacy, political, or proprietary concerns?

Human example: Ideally, decision makers should identify the inferences they’re making and show how they are weighting the available evidence. But that can’t happen if their decision methods are crucial to maintaining a competitive advantage. In that case, they should describe their methods in general terms and make the conversation about why, not how.

Machine example: It’s not easy being AI. Critics are scrutinizing how algorithms make decisions – and rightly so. But explainable AI is fraught with issues, as Cassie Kozyrkov aptly describes.

Zerilli et al. make an intriguing case in “Transparency in Algorithmic and Human Decision-Making: Is There a Double Standard?”: “We worry that automated decision-making is being held to an unrealistically high standard, possibly owing to an unrealistically high estimate of the degree of transparency attainable from human decision-makers…. [W]e review evidence demonstrating that much human decision-making is fraught with transparency problems, show in what respects AI fares little worse or better and argue that at least some regulatory proposals for explainable AI could end up setting the bar higher than is necessary or indeed helpful.”

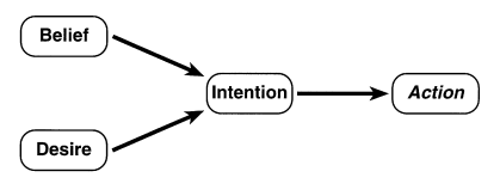

Both humans and algorithms are better off explaining their reasons and intentions rather than their technical details. The authors “argue that since the justification of action for human purposes takes the form of intentional stance explanation, the justification of algorithmic decisions should take the same form. In practice, this means that the sorts of explanations for algorithmic decisions that are analogous to intentional stance explanations should be preferred over ones that aim at the architectural innards of a decision tool.”

“If you can’t explain it simply, you don’t understand it well enough.” — Albert Einstein

It will run you about $30 US, but for a philosophical deep dive into connections between decision science, AI, and explaining, the Zerilli paper is a must-read.

Recognize your biases.

Oftentimes we don’t recognize what influences our own reasoning, leaving decisions open to criticism.

Human example: Negative experiences can push us toward overly cautious decision-making. Surely everyone has made a conservative choice to avoid embarrassing results and limit downside risk, possibly missing out on a big upside, such as passing on Bitcoin in 2012 (!). Evidence shows we really dislike losses: As the excellent Decision Lab explains, Tversky and Kahneman described the concept of loss aversion, a cognitive bias with potentially harmful consequences.
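For readers who want the math: in Tversky and Kahneman’s 1992 formulation of cumulative prospect theory, loss aversion shows up as a coefficient λ > 1 that scales losses in the value function. The parameter values below are the median estimates commonly cited from that study; treat them as illustrative, since estimates vary across studies and individuals. The second line evaluates a 50/50 bet to win or lose $100 and, for simplicity, ignores the theory’s probability-weighting component.

```latex
v(x) =
\begin{cases}
  x^{\alpha} & x \ge 0 \\
  -\lambda\,(-x)^{\beta} & x < 0
\end{cases}
\qquad \alpha \approx \beta \approx 0.88, \quad \lambda \approx 2.25

0.5\,v(100) + 0.5\,v(-100) = 0.5 \cdot 100^{0.88}\,(1 - 2.25)
  \approx 0.5 \cdot 57.5 \cdot (-1.25) \approx -36
```

A bet with zero expected dollar value ends up with a clearly negative subjective value, which is one way to see why the cautious choice so often feels like the safe one.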

Machine example: Machine learning models are developed using training data, and their capabilities depend on the completeness and quality of those data sets. Here, human error (choosing crummy training data) causes technical bias. A fraud-detection model trained on old cases might catch only the events that fit an outdated pattern of fraud. And a hiring tool trained on tech resumes from Russian Hill residents might not recognize qualified candidates from elsewhere.
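One simple guard against crummy training data is to compare how often each category appears in the training set with how often it appears in the population the model will actually serve. The sketch below is a hypothetical check, not a standard method: the function name, the neighborhood labels, and the 0.5 threshold are illustrative assumptions. It flags categories whose training share is less than half their real-world share, including categories the training data never saw.

```python
from collections import Counter


def coverage_report(training_values, deployment_values, min_share_ratio=0.5):
    """Flag categories underrepresented in training data (hypothetical helper).

    A category is flagged when its share of the training set is below
    min_share_ratio times its share of the deployment population. Categories
    that never appear in training get a training share of 0.0.
    Returns {category: (training_share, deployment_share)}.
    """
    if not training_values or not deployment_values:
        raise ValueError("both inputs must be non-empty")
    train_counts = Counter(training_values)
    deploy_counts = Counter(deployment_values)
    n_train = len(training_values)
    n_deploy = len(deployment_values)

    flagged = {}
    for category, count in deploy_counts.items():
        deploy_share = count / n_deploy
        train_share = train_counts.get(category, 0) / n_train
        if train_share < min_share_ratio * deploy_share:
            flagged[category] = (round(train_share, 3), round(deploy_share, 3))
    return flagged


if __name__ == "__main__":
    # Hypothetical data: resumes skew heavily toward one neighborhood.
    training = ["Russian Hill"] * 90 + ["Mission"] * 10
    deployment = ["Russian Hill"] * 30 + ["Mission"] * 40 + ["Bayview"] * 30
    print(coverage_report(training, deployment))
    # {'Mission': (0.1, 0.4), 'Bayview': (0.0, 0.3)}
```

A check like this only catches gaps along attributes you think to measure; it says nothing about whether the labels themselves (past hiring or fraud decisions) were biased.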

Few things are harder than becoming aware of bias in your own decision making. Try cognitive simplification for strategy or group behavioral techniques for decision makers. When developing technology, consider discrimination detection and algorithm audits, a checklist for biased training data, or IBM’s AI Fairness 360 toolkit.
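As one concrete example of an algorithm audit, a common first check is the disparate impact ratio: each group’s rate of favorable outcomes divided by a reference group’s rate. In US employment practice, the “four-fifths rule” from the EEOC’s Uniform Guidelines treats a ratio below 0.8 as a signal of possible adverse impact worth investigating. The plain-Python sketch below computes that ratio; it does not use AI Fairness 360’s API, and the function names, group labels, and numbers are hypothetical.

```python
def selection_rates(decisions, groups):
    """Share of favorable decisions (e.g. 'advance to interview') per group."""
    totals, positives = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(bool(decision))
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_audit(decisions, groups, reference_group, threshold=0.8):
    """Compare each group's selection rate with the reference group's.

    Returns (ratios, flagged): ratios maps each non-reference group to its
    rate divided by the reference rate; flagged lists groups whose ratio
    falls below the threshold (0.8 mirrors the four-fifths rule).
    """
    rates = selection_rates(decisions, groups)
    ref_rate = rates[reference_group]
    if ref_rate == 0:
        raise ValueError("reference group has no favorable decisions")
    ratios = {g: r / ref_rate for g, r in rates.items() if g != reference_group}
    flagged = sorted(g for g, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged


if __name__ == "__main__":
    # Hypothetical screening results: 20 of 50 in group A advance, 10 of 50 in B.
    decisions = [1] * 20 + [0] * 30 + [1] * 10 + [0] * 40
    groups = ["A"] * 50 + ["B"] * 50
    ratios, flagged = disparate_impact_audit(decisions, groups, reference_group="A")
    print(ratios, flagged)  # {'B': 0.5} ['B']
```

A low ratio is a prompt for scrutiny, not proof of discrimination; a full audit would also look at sample sizes, other fairness metrics, and how the decisions were generated.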

Embrace the scrutiny: How to stay cringe-free and confident.

In some environments, it’s taboo to discuss or question management decisions. Hesitating to shine a light on decision processes erodes trust and misses an opportunity to teach by example. It’s better to:

– Engage stakeholders in a conversation about what should count as a valid explanation, and what types of evidence are required to support it.

– Encourage people to evaluate and buy into decision processes separately from the outcomes. Invite them to join you on a path toward decision-making maturity. Focus on how to improve the process given new evidence.

Posted by Tracy Allison Altman on 5 February 2019.

Photo Credit: Photo by Kane Reinholdtsen on Unsplash