1. Vigilance → Better algorithms

“Eliminating bias… requires constant vigilance on the part of not only data scientists but up and down the corporate ranks.” In an insightful InformationWeek commentary, James Kobielus (@jameskobielus) considers the importance of “Debiasing Our Statistical Algorithms Down to Their Roots.”

“Rest assured that AI, machine learning (ML), and other statistical algorithms are not inherently biased against the human race. Whatever their limitations, most have a bias in favor of action. Algorithms tend to make decisions faster than human decision makers, do it more efficiently and consistently, consider far more relevant data and variables, and leave a more comprehensive audit trail. For their part, human decision makers often have their own biases, such as giving undue weight to their personal experiences, basing decisions on comfortable but unwarranted assumptions, and only considering data that supports preconceived beliefs.”

DATA FOR DECIDING: As everyone rushes to debias, Kobielus cautions against overstating the importance of bias reduction, which is “only one of the outcomes that algorithm-driven decision-making processes are designed to promote. Algorithmic processes may also need to ensure that other outcomes — such as boosting speed, efficiency, throughput, profitability, quality, and the like — are achieved as well, without unduly compromising fairness. Trade-offs among conflicting outcomes must always be addressed openly.”

2. Privileged evidence class → Weaker decisions

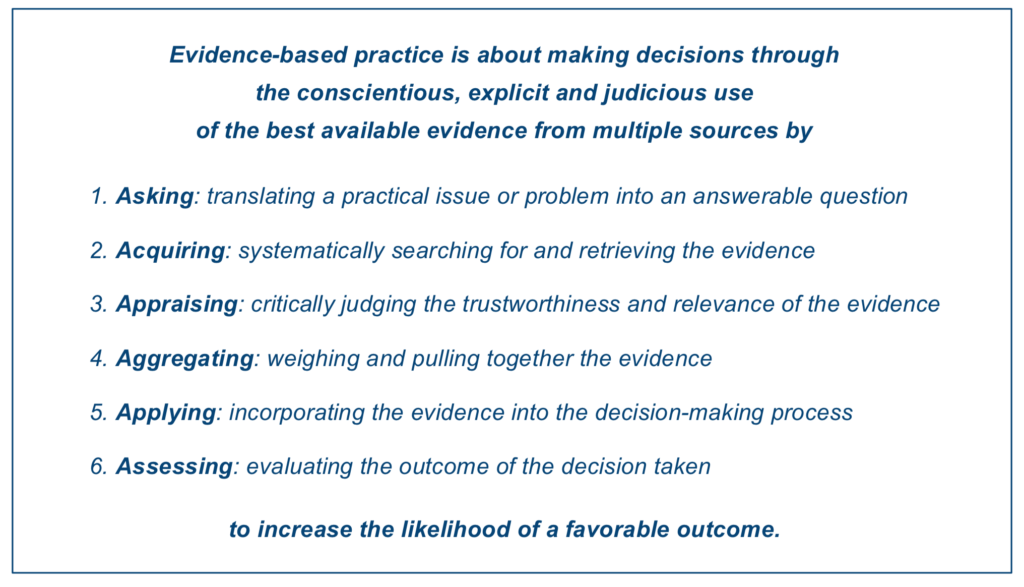

It’s easy to commit to evidence-based management in principle, but not so easy to define the ‘best available evidence’ and then apply it to reach a high-quality decision. Edward Walker writes on LinkedIn that “Being evidence-based does not mean privileging one class of evidence over all others. Regardless of its source, all evidence that is judged to be trustworthy and relevant, should be considered as part of the decision-making process.”

DATA FOR DECIDING: Here’s a cautionary tale against relying too heavily on a set of KPIs or a standardized dashboard, unless you’re automating routine, repeatable operational decisions using consistently reliable data. Most management actions require weighing fuzzy evidence and following nuanced methods to reach an unbiased decision. The development of evidence-based management resembles the early development of evidence-based medicine: hierarchies of evidence (Level 1, A-B-C, etc.) were established to weed out low-quality findings, but it wasn’t long before we had sublevels 1a, 1b, 1c, and so forth.

Hierarchies, metrics, and similar simplifying mechanisms are valuable unless they are weaponized to the exclusion of experience, logic, and ideas. Weighing evidence quality is never as simple as identifying how it was collected (for example, by randomized trial, observation, or survey); decision makers, even non-experts, must understand the basics of the available analytical methods to fully evaluate the explanatory value of findings.

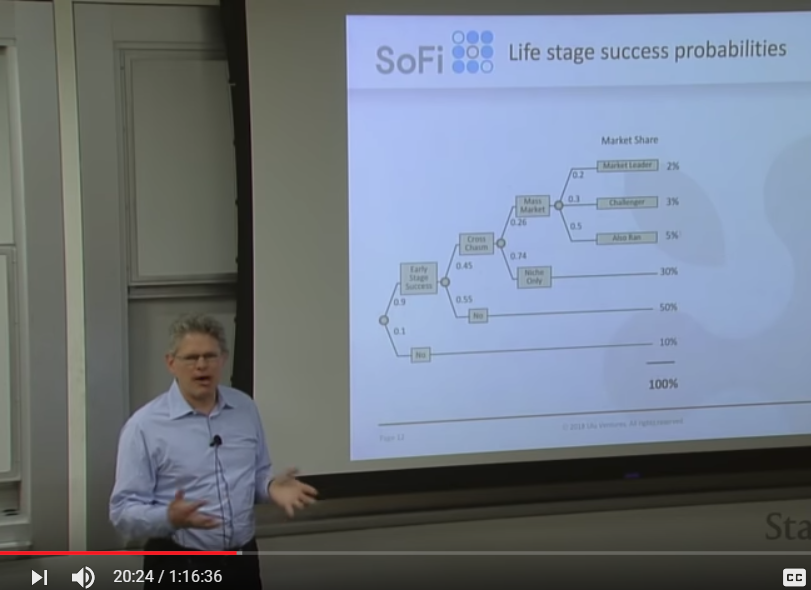

3. Decision Analysis → VC rigor

How to apply systematic workflows to venture capital decisions: Clint Korver of Ulu Ventures presented to Stanford’s class on professional decision analysis. Thanks to Somik Raha.

4. Private sector $ → Better government outcomes

The Bloomberg American Cities Initiative pledged $42 million to @WhatWorksCities to help city halls use data to improve decision making.

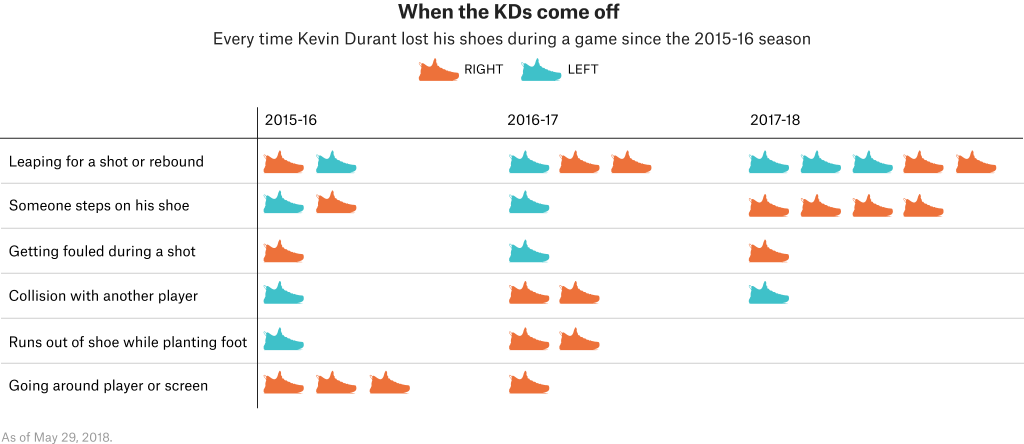

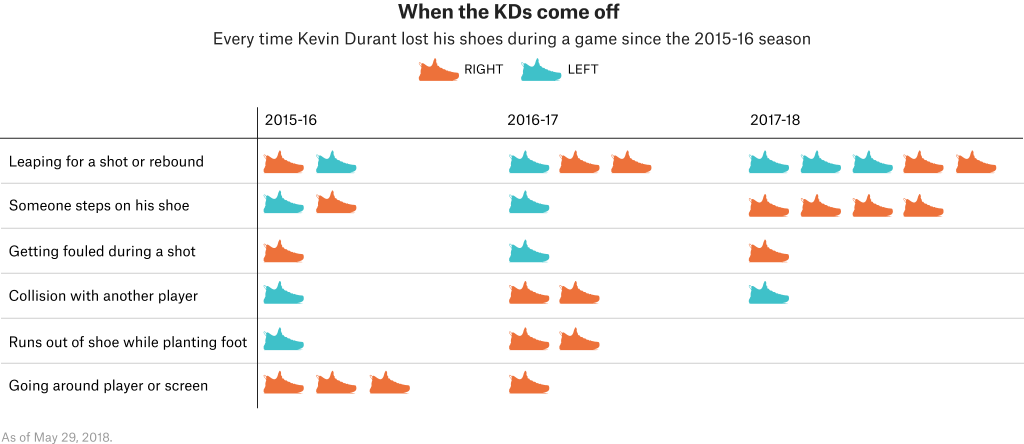

Quick insight (a/k/a obligatory Golden State Warriors mention): Chris Herring (@herring_nba) at FiveThirtyEight ponders why Kevin Durant loses his shoes so often.

Posted by Tracy Allison Altman on

Photo by NATHAN LAM on Unsplash.