To cut through AI complexity, focus on decisions.

Never has it been more important to explain complex concepts effectively. Technology is influencing most decision processes, and not always transparently. On the bumpy road toward explainable AI (XAI), we find great communication options, from printed materials to state-of-the-art experiences. But where do you start, and how do you know when you’re finished? How do you describe technology to people with varying levels of knowledge and interest? Focus on the decision(s) being made.

Experts weigh in on XAI from numerous perspectives: previously I wrote about a wonderful philosophical analysis centered around Dennett’s intentional stance. That logic is consistent with my method of framing conversations around the actions being influenced by the technology. In this post, I show how to achieve the dual purpose of creating engaging content and satisfying learning objectives.

Envision a human-in-the-loop future.

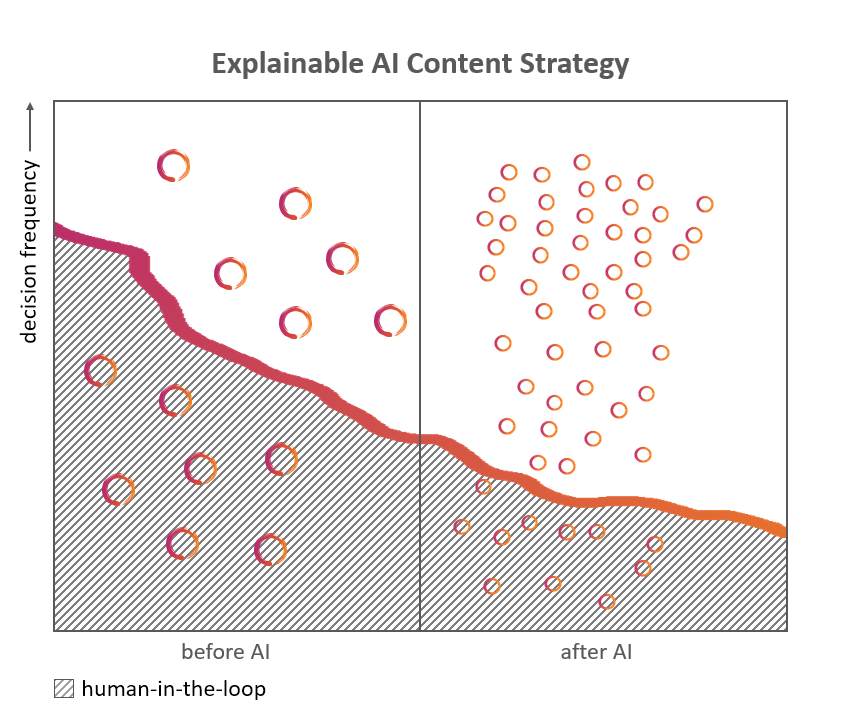

Emphasize before & after decisions to explain technology’s potential role in important processes. Rather than describe nuts and bolts, articulate how decision-making will evolve in the human-in-the-loop, AI-informed future. Use concrete language that’s meaningful to the audience – your executive team, a user group, a client, or the public.

Before: What decisions are being made now, and how (status quo before AI)? Which are machine-automated or machine-augmented?

After: How will key decisions differ after AI adoption? Does the frequency change? Which decisions are made by AI autonomously? Which continue to be made by humans? How will humans approve or collaborate on decisions alongside AI?

Quality, quality, quality. In both cases, address how decision quality will be evaluated and sustained. Establish characteristics of a ‘good’ AI decision and those of a ‘good’ human-led one.

Example 1: Insurance underwriting.

| Before AI | After AI |
| --- | --- |
| human-led / underwriter policy pricing | AI-informed, human-led pricing decisions |
| policy-writing opportunities | similar decision frequency |
| complex information | more variables as decision criteria |
| quality check: consistency with professional standards, expectations | quality check: consistency with corporate financial targets & ethical guidelines, avoidance of systematic bias |

Example 2: Driver dispatch.

| Before AI | After AI |
| --- | --- |
| taxi dispatcher assigns driver | AI approves passenger request, suggests rideshare driver(s) |
| human-led | granular data, many interim decisions (e.g., choosing a route) |
| quality check: consistency with standard practices | quality check: ‘good’ decisions improve customer experience and avoid red-zoning and regulatory violations |

Now for the explaining part. Once the before/after scenarios are identified, it’s time to explain specifically how humans and AI will arrive at decisions. This emphasis creates a clear point of view that drives meaningful content design. Options range from walking decision makers through a hands-on experience to presenting concise descriptions and visualizations.

Posted by Tracy Allison Altman on 29 Jan 2020.

Photo credit: Alexander Mueller on Flickr.