As data complexity grows, so does the importance of explaining. The philosophy of science can teach us about the role of explaining in high-quality, evidence-based decisions. This isn’t just navel-gazing. An explanation is a statement that makes something clear, or a reason or justification given for an action or belief. It describes “a set of facts which clarifies the causes, context, and consequences of those facts.”

Whether made by man or machine, a high-quality decision first requires explanatory data, and then the decision needs to be explained to stakeholders. Within particular knowledge domains, we build consensus on what counts as valid evidence. This improves quality for routine decisions (assuming people follow the ‘rules’ of evidence). Examples include the Minnesota Multiphasic Personality Inventory (MMPI) in psychology; the reporting of proven reserves in the petroleum industry; and automated prediction of medical evidence quality.

Good explainers can connect actions with outcomes, focusing activities on adding value. When hiring people, we don’t always look for this capability. More often we screen for critical thinking, research methods, or presentation skills, all of which are, of course, related to explaining. Technology fares worse: explanation capability is rarely present or even expected.

Should technology be ‘explanation-aware’? Early artificial intelligence systems, such as expert systems, were expected to explain their decision-making: a 1993 survey showed the intention was there, although explanation capability was limited. Meanwhile, today’s black-box algorithms are scaring the pants off people. Some are calling for explainable AI (XAI), so people can understand technology’s decision-making. But how would XAI work, particularly for opaque methods like deep learning? As Rudina Seseri asks on TechCrunch: “What do we want to know? The algorithms or statistical models used? A cause-consequence relationship with human-intelligible concepts?” Plus, there are confidentiality issues around proprietary knowledge.

What is the skill set for explaining? On the human side of the equation, is your hiring process explanation-aware? Things to think about:

Explanatory power. Are you evaluating candidates’ ability to develop evidence with explanatory power, producing data that explain why rather than just what? Do they do this manually, or with algorithms?

Examples: Explaining the root causes of customer satisfaction, or why a social program failed.

What it’s not: Manipulating data, creating simple visualizations and dashboards, or administering Myers-Briggs.

Process leadership. Is the candidate able to determine what counts as a valid explanation, and what types of supporting evidence are required? Are they able to lead processes that reinforce these concepts? Can they structure explanations in a repeatable way?

Right person in the right role. Are you filling a role where explaining is essential to success? Or are you seeking technical skills for managing data, projects, and reporting?

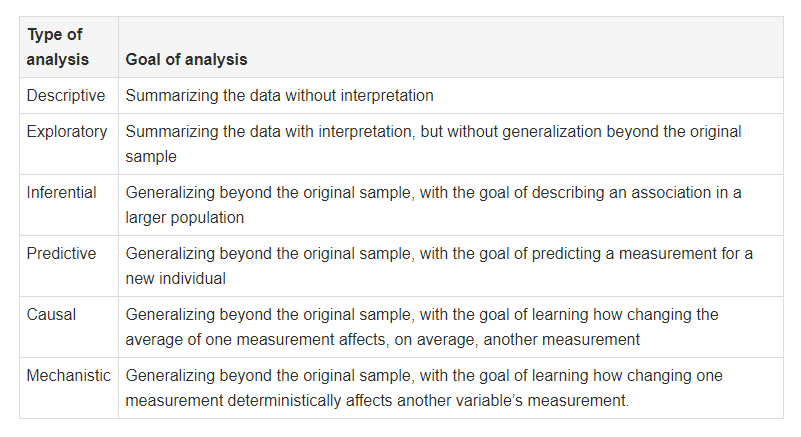

Does A → B? A good explainer recognizes the difference between correlation and causation, and understands the difference between observational and experimental data. They can explain an analysis without inadvertently leading the audience to infer causation.

Source: @jtleek et al. on PeerJ.
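To make the A → B question concrete, here is a minimal Python sketch built on a hypothetical toy model (not taken from the PeerJ source): a hidden factor Z drives both A and B, while A has no effect on B at all. The function names `simulate` and `pearson`, and every parameter value, are illustrative assumptions. In observational data A and B are strongly correlated; in a simulated experiment where A is set by hand, B doesn’t budge.

```python
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def simulate(n=10_000, seed=42, set_a=None):
    """Hypothetical toy model: a hidden confounder Z drives both A and B.

    A has no causal effect on B. If set_a is given, A is fixed by
    intervention (an 'experiment') instead of being observed.
    """
    rng = random.Random(seed)
    a_vals, b_vals = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)  # unobserved common cause
        a = z + rng.gauss(0, 0.5) if set_a is None else set_a
        b = z + rng.gauss(0, 0.5)  # B depends on Z only, never on A
        a_vals.append(a)
        b_vals.append(b)
    return a_vals, b_vals


# Observational data: A and B move together because both follow Z.
a_obs, b_obs = simulate()
print(f"observational corr(A, B) = {pearson(a_obs, b_obs):.2f}")

# Experimental data: setting A by hand leaves the average of B unchanged.
_, b_low = simulate(set_a=-1.0, seed=1)
_, b_high = simulate(set_a=1.0, seed=2)
print(f"mean B when A is set to -1: {statistics.fmean(b_low):.2f}")
print(f"mean B when A is set to +1: {statistics.fmean(b_high):.2f}")
```

Under these assumptions the observational correlation comes out near 0.8, yet both experimental means of B stay close to zero. Reporting only the first number would invite exactly the causal misreading a good explainer avoids.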

XAI translator talent. Can the candidate assess an algorithm’s decision process and explain it to humans? Can they build a bridge between XAI and business leaders?

Prediction. Explaining is often backward-looking: it makes sense of causes and consequences. Can the candidate apply prior explanations to predict future outcomes? For instance, analyzing past performance to recommend portfolio decisions.

Quick insights.

An algorithm for arranging desks in shared workspaces. Via Carl Anderson (@LeapingLlamas).

Dominant men decide faster. Are faster decisions better? Yes, for men who use them to emerge as leaders.

How the analytics-obsessed Houston Astros make winning decisions.

Posted by Tracy Allison Altman on 17-Sep-2018.

Photo credits: Henry Gillis on Unsplash