1. Debiasing → Better decisions

Debiasing is hard work, requiring honest communication and occasional stomach upset. But it gets easier and can become a habit, especially if people have a systematic way of checking their decisions for bias. In this podcast and interview transcript, Nobel laureate Richard Thaler explains several practical ways to debias decisions.

First, know your process. “You can imagine all kinds of good decisions taken in 2005 were evaluated five years later as stupid. They weren’t stupid. They were unlucky. So any company that can learn to distinguish between bad decisions and bad outcomes has a leg up.”

Writing things down is a must-have for repeatable, high-quality decision processes. Under “Write Stuff Down,” Thaler encourages teams to avoid hindsight bias by memorializing their assumptions and their evaluation of a decision, which prevents people from later claiming “I never liked that idea.” (Ed. note: I wonder if this write-it-down approach would make the best, or possibly the worst, marital advice ever. But I digress.)

Choice architecture relates to debiasing. It “can apply in any company. How are we framing the options for people? How is that influencing the choices that they make?” But to architect a set of effective choices, for ourselves or others, we must first correct for our own cognitive biases and truly understand how and why people choose.

Diversity of hiring + diversity of thought = Better decisions. “[S]trong leaders, who are self-confident and secure, who are comfortable in their skin and their place, will welcome alternative points of view. The insecure ones won’t, and it’s a recipe for disaster. You want to be in an organization where somebody will tell the boss before the boss is about to do something stupid.” [McKinsey Quarterly, May 2018]

2. Controls, placebos → Health app evidence

In Slate, Jessica Lipschitz and John Torous argue that we need a sugar-pill equivalent for digital health. “Without placebos, we can’t know the actual impact of a [health app] because we have not controlled for the known impact of user expectations on outcomes. This is well illustrated in a recent review of 18 randomized controlled trials evaluating the effectiveness of smartphone apps for depression.” When an app was compared with a ‘waitlist’ control condition, the app seemed to reduce depressive symptoms. But when the apps were compared with active controls, such as journaling, their comparative effectiveness fell 61%. “This is consistent with what would be expected based on the placebo effect.” Via David Napoli’s very informative Nuzzel newsletter. [Slate Magazine: Why It’s So Hard to Figure Out Whether Health Apps Work]

3. First-year value → Analytics success

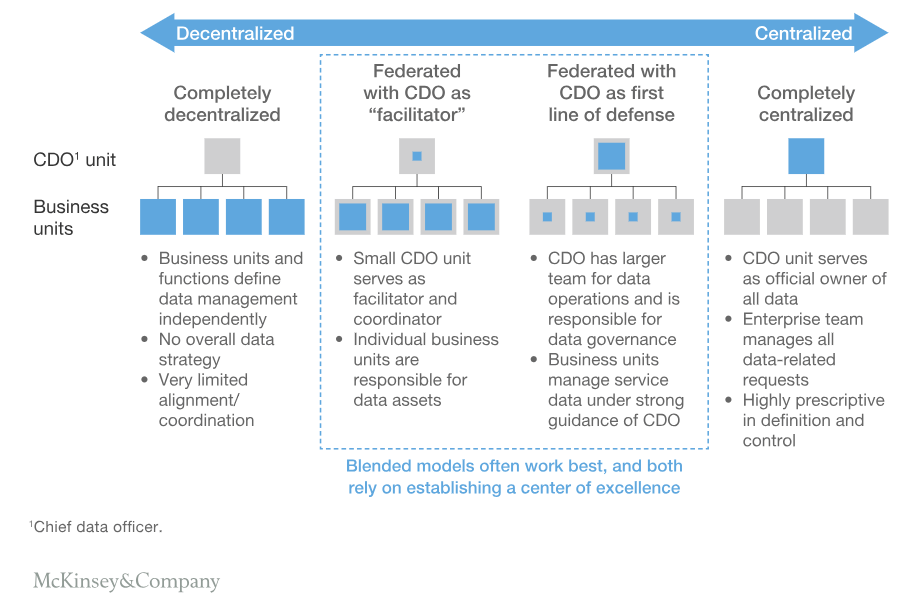

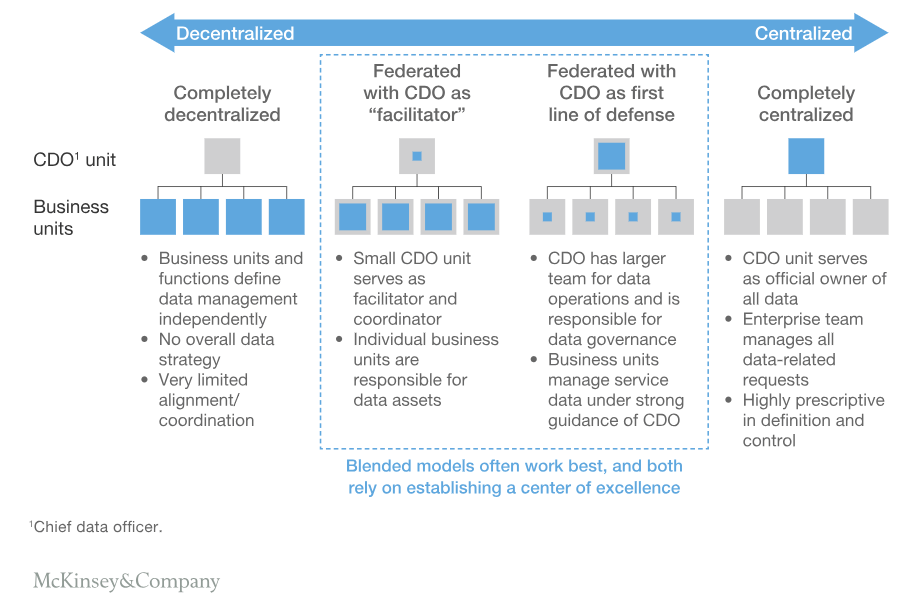

Oliver Fleming et al. describe ten red flags signaling that an analytics program will fail, and with them ten ways to improve your chances of success. Analytics can’t be isolated from the business. The structure of data teams is crucial: analytics programs that are either too centralized or fully decentralized will underperform those that allow coordinated innovation. “A definite red flag that the current organizational model is not working is the complaint from a data scientist that his or her work has little or no impact and that the business keeps doing what it has been doing.” There’s no point in funding a data model that’s disconnected from the rest of the company. Also:

Choose use cases wisely. Demonstrating analytics value, ideally during year one, encourages wider adoption across the organization.

Define analytics roles. Carefully defined roles will bring efficiencies and improve talent retention. McKinsey strongly advocates distinguishing between the activities of data architects, data engineers, data scientists, analytics translators, etc. [Ten red flags signaling your analytics program will fail, May 2018]

Quick insights.

– Social programs: Using behavioral science to improve interactions between agencies and parents with children in the system.

– Statistical Thinking: Road Map for Choosing Between Statistical Modeling and Machine Learning.

– Net Promoter Score: NPS debunked as “best” growth predictor? By @bobehayes.

– Yikes. An “analysis of comments in both formal student evaluations and informal online ratings indicates that students do evaluate their professors differently based on whether they are women or men,” with students remarking on female professors’ appearance and personality far more frequently. Empirical evidence shows this “bias does not seem to be based solely (or even primarily) on teaching style or even grading patterns. Students appear to evaluate women poorly simply because they are women.”

Posted by Tracy Allison Altman on

Photo credit: Federico Bottos on Unsplash